MANAGING OUR SAFETY RISKS

May 13, 2010 By Kathy Fox

On March 16, 2010, the TSB issued its Watchlist of the nine safety issues in transportation that pose the greatest risk to Canadians. The Board identified the oversight and implementation of SMS by the entire transportation industry as one of these risks.

Through its Watchlist, the TSB has established the greatest risk factors in transportation to Canadians. PHOTO: archives

This article describes some of the challenges and lessons learned by companies as they implement SMS. While it draws on examples from aviation, similar issues can be found in other safety-critical organizations.

The roots of SMS

Organizations manage competing and often conflicting goals and priorities (such as safety, customer service, productivity, and return on investment) usually in the face of risk and uncertainty. How can they recognize when they are drifting outside the boundaries of safe operations while focusing on their other priorities?

The International Maritime Organization (IMO) and the International Civil Aviation Organization (ICAO) recognized that traditional approaches to safety management, based primarily on compliance with regulations, reactive responses following accidents and a ‘blame and punish’ philosophy, were insufficient to reduce accident rates. Additionally, a number of researchers looked beyond human error as a cause of accidents to examine organizational factors. They examined the goals and expectations that organizations place on employees and the role these play in contributing to accidents. From this work came approaches to enhance an organization’s ability to anticipate failure and rebound from unexpected events, which are the basis for SMS.

What is SMS?

SMS is generally defined as a formalized framework for integrating safety into an organization’s daily operations, including the necessary organizational structures, accountabilities, policies and procedures, so that, as James Reason states, “… it becomes part of that organization’s culture, and of the way people go about their work” (See sidebar: Reason 2001:28). While individual employees routinely make decisions about risk, SMS focuses on organizational risk management, since organizations create the context in which work gets done by setting priorities and providing resources such as staff, tools and training (See sidebar: Dekker 2005).

SMS was first introduced in the 1980s in the chemical industry and gradually migrated to other safety-critical industries. It was often introduced following high-profile, tragic accidents, in recognition of the need for a better way to prevent them. Companies also adopted SMS as a result of new regulations.

SMS is sometimes misconstrued as a form of deregulation or industry self-regulation. In fact, SMS is a framework that enables companies to better manage safety risks. Just as companies rely on financial and HR management systems to manage their financial assets and human resources, so too should they have systems to manage safety. This does not preclude the need for effective regulatory oversight.

Lessons learned about SMS implementation

The TSB has been investigating accidents in the SMS environment since the early 2000s. Over the years, a number of TSB investigations found deficiencies related to developing and implementing SMS, including:

- Problems with risk analysis

- Goal conflicts

- Employee adaptations

- Early warning signs of hazardous situations (weak signals) not recognized or addressed

- Non-reporting of incidents

The challenges of risk analysis

The biggest challenge of risk analysis is thinking of all of the possible ways things might go wrong, especially when complex systems and procedures interact with each other. Accident investigations have often revealed shortcomings in how operators analyze risks. For example, some risk analysis processes were too informal, and in others the participants were not sufficiently knowledgeable to identify all of the potential hazards. Companies often did not recognize the impact of operational changes, equipment design factors, and operator training and experience on safety.

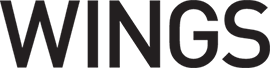

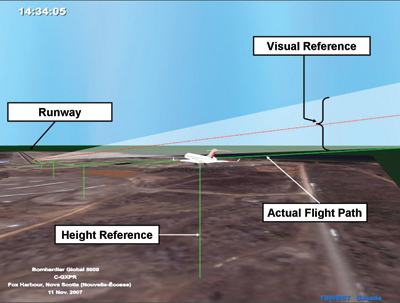

During its investigation into why a Bombardier Global 5000 business jet touched down short of the runway in Fox Harbour, N.S., in 2007, the TSB found that business aircraft operators were free to implement SMS on their own terms with no fixed timeframe. In contrast, commercial operators were required to implement SMS in stages on a fixed timeline. This meant that many operators did not have a fully functioning SMS, including the operator of the accident aircraft.

In the Fox Harbour accident, the operator did not properly assess, in accordance with safety management principles, the risks of introducing the Global 5000 into its operations. As such, the Board recommended that the Canadian Business Aviation Association (CBAA) set SMS implementation milestones for private operator certificate holders and that Transport Canada ensure that the CBAA implement an effective quality assurance program for auditing certificate holders.

On March 16, 2010, Transport Canada announced that it would take back responsibility for the certification and oversight of business aircraft from the CBAA. This change will take effect on April 1, 2011.

Goal conflicts – drift into failure

Organizations can slowly drift into failure as employees and managers undertake what Sidney Dekker describes as the “normal processes of reconciling differential pressures on an organization (efficiency, capacity utilization, safety) against a background of uncertain technology and imperfect knowledge” (See sidebar: Dekker 2005:43).

There is a routine tension between acute production goals and ongoing safety goals, which may prompt people to make decisions that are riskier than they realize. In describing why deteriorations in safety defences leading up to two accidents had not been detected and repaired, James Reason suggested “the people involved had forgotten to be afraid… If eternal vigilance is the price of liberty, then chronic unease is the price of safety” (See sidebar: Reason 1997:37).

Drift into failure was evident in the TSB’s investigation into the 2004 crash on takeoff of a Boeing 747 freighter at Halifax International Airport. As the crew had been on duty for 19 hours, fatigue likely contributed to a data input error which led to the incorrect calculation of takeoff speeds and engine power settings. Although the company had implemented a maximum 24-hour duty day, the report noted that this crew would have been on duty for about 30 hours had the flight reached its destination. As routes expanded, the crewing department gradually scheduled more flights in excess of the 24-hour limit. This routine non-adherence to company procedures contributed to an environment where some employees and company management felt that it was acceptable to deviate from established policies and procedures when it was considered necessary.

Employee adaptations – putting procedures into practice

There is frequently a mismatch between how written procedures specify work should be performed and how work actually gets done, which may lead to drift into failure (Dekker, 2003).

Front-line workers often create “locally efficient practices” to get the job done, while facing pressures such as limited time and resources and multiple goals. As Dekker points out, “Past success is taken as a guarantee of future safety. Each operational success achieved at incremental distances from the formal, original rules can establish a new norm…. Departures from the routine become routine… violations become compliant behavior” (See sidebar: Dekker, 2003:236).

In the Fox Harbour accident, the crew adopted a company-sanctioned practice of “ducking under” visual glide slope indications to land near the beginning of a short, wet runway.

In its investigation of the 2007 crash of a Beech King Air following an overshoot at Sandy Bay, Sask., the TSB found that deficiencies in the operator’s supervisory activities permitted substantial, widespread and undetected deviations from standard operating procedures.

A fuller understanding of why gaps exist between written procedures and real practices will help organizations intervene more effectively than simply telling workers to “Follow the rules!” or “Be more careful!”

‘Weak signals’ undetected

In several accidents, early warning signs of hazardous situations (“weak signals”) were either not recognized or not effectively addressed. By their nature, weak signals may not be sufficient to attract the attention of busy managers, who are stretched too thin or focused on other priorities. This may also reflect a company’s limited capability to gather and analyze this information effectively. In a 2009 article, William Voss, President and CEO of Flight Safety Foundation, said: “As random as these recent accidents look, though, one factor does connect them. We didn’t see them coming and we should have… the data were trying to tell us something but we weren’t listening” (See sidebar: Voss, 2009).

For example, in the Sandy Bay accident, the TSB found that crew coordination was inadequate to safely manage the risks associated with the flight. While company managers identified and addressed some crew pairing issues, they were unaware of the extent to which they could impair effective crew coordination.

Incident reporting

One way to amplify weak signals may be to receive data from multiple sources. Confidential, internal incident reporting systems, which are an integral part of an effective SMS, can be a rich source of hazard information. But people won’t report their mistakes if they are afraid of being punished, which represents a lost opportunity for organizational learning and risk reduction (Dekker, 2007).

During the Sandy Bay investigation, the TSB found that: “In an SMS environment, inappropriate use of punitive actions can result in a decrease in the number of hazards and occurrences reported, thereby reducing effectiveness of the SMS.”

The way ahead

Implementing SMS does not, and realistically cannot, totally immunize organizations against failing to identify hazards and mitigate risks. Nor can it ensure that goal conflicts are always reconciled in favour of safety, or that insidious adaptations and drift are avoided.

While SMS is an effective way of managing risk, its success depends on how it is implemented. SMS requires complete commitment from senior management, a significant investment of time and resources, a solid safety culture that permeates the organization and formal, documented processes that actually work for the operator. As well, regulators still need to provide effective safety oversight, especially during the transition to the SMS environment.

Organizations implementing SMS can and should learn from accident investigations, since these also demonstrate patterns of accident precursors (e.g. not thinking through what might go wrong, not having an effective means to track and highlight recurrent safety deficiencies, insufficient training and/or resources to deal with unexpected events).

As the implementation of SMS matures, the TSB will continue to investigate how effectively operators manage safety risks and we will communicate the lessons we learn to regulators, the transportation industry and the Canadian public.

THE TSB

The Transportation Safety Board of Canada (TSB) advances safety by investigating selected air, rail, marine and pipeline accidents. TSB investigations often examine the influence of organizational factors, including the development and implementation of Safety Management Systems (SMS).

References

- Dekker, S. (2003). Failure to adapt or adaptations that fail: contrasting models on procedures and safety. Applied Ergonomics, 34, 233-238.

- Dekker, S. (2005). Ten Questions About Human Error: A New View of Human Factors and System Safety. Lawrence Erlbaum Associates, Inc.

- Dekker, S. (2007). Just Culture: Balancing Safety and Accountability. Ashgate Publishing Company.

- Reason, J. (1997). Managing the Risks of Organizational Accidents. Ashgate Publishing Ltd.

- Reason, J. (2001). In search of resilience. Flight Safety Australia, September-October, 25-28.

- Voss, W. R. (2009, May). Listening to the Data, Flight Safety Foundation. AeroSafetyWorld.

TSB Investigation Reports

- Aviation Investigation Report A07C0001, Collision with Terrain, Transwest Air, Beech A100 King Air C-GFFN, Sandy Bay, Saskatchewan, January 7, 2007.

- Aviation Investigation Report A07A0134, Touchdown Short of Runway, Jetport Inc., Bombardier BD-700-1A11 (Global 5000) C-GXPR, Fox Harbour Aerodrome, Nova Scotia, November 11, 2007.

TSB Watchlist: http://www.bst-tsb.gc.ca/eng/surveillance-watchlist/index.asp

Kathy Fox is a board member of the TSB of Canada. Her experience includes work as an air traffic controller, commercial pilot and flight instructor, management positions at Transport Canada, and the role of VP of Operations at Nav Canada. She holds a Bachelor of Science and a Master’s in Business Administration from McGill University. She also holds a Master of Science in Human Factors and System Safety from Lund University in Sweden.